1.

One should not demand too much of the dilemma outlined below. The situation described has the nature of a thought experiment. Ruwen Ogien described the scope and limits of thought experiments, these “little fictions, specially devised in order to arouse moral perplexity,” in his words (2). He adds that “they allow us to identify more clearly the factors that influence our moral judgments.” As for thought experiments in ethics, their “ultimate aim” is “to know if there are reasons to keep [reality] as it is or to change it.” The “dilemma – thought experiment” that we propose (and that may already have been proposed elsewhere; we do not know at the time of writing this post) aims to assess the role of emotions in the decision that could be made by the algorithm of a future fully autonomous vehicle. The dilemma includes two situations.

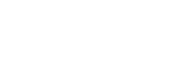

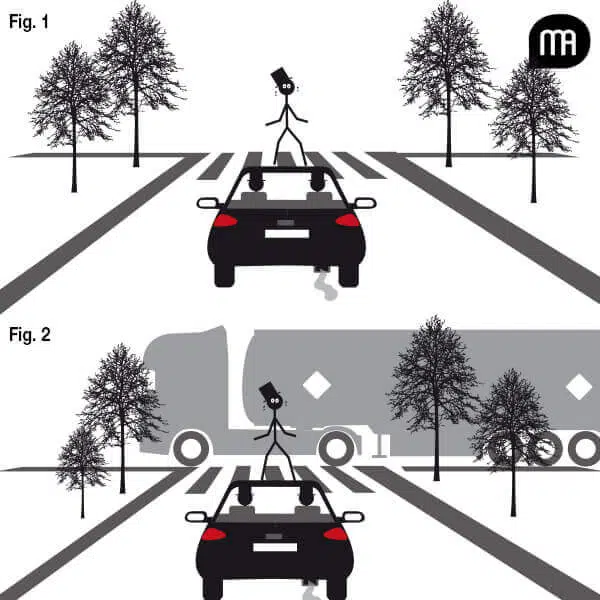

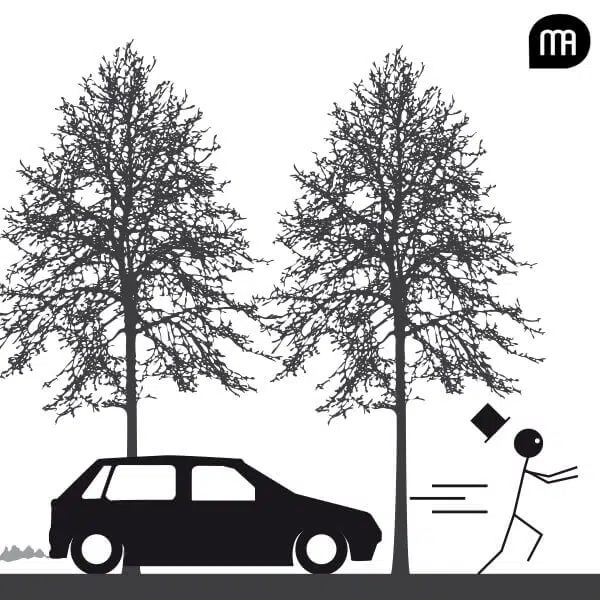

In the first situation, an autonomous vehicle (AV) with defective brakes is driving towards a crossroads (see Figure 1). It carries two passengers. A pedestrian is crossing the road a short distance ahead, which he is entitled to do since the traffic light is red. Neither passenger is able to take control of the vehicle, and even if one could, there would not be enough time to act. The AV autopilot thus faces the following dilemma: either it holds its course and the vehicle hits the pedestrian, or it swerves and, in that case, its passengers will very likely die because the vehicle will hit a wall. Importantly, the pedestrian notices that the vehicle is heading towards him and will hit him. Paralysed by the situation, he is seized with fear, and his face expresses it. The object of his fear is that he will suffer bodily harm or lose his life.

The second situation is identical to the first, with one exception: a tanker truck driving on the other lane leading to the intersection, which is allowed to proceed since the traffic light is green, is about to pass level with the pedestrian (see Figure 2). If the AV continues on its way, it will first hit the pedestrian, then the tanker truck. This will harm the truck driver, the two AV passengers and others in the vicinity of the accident site; to give an order of magnitude, about 20 people in total are affected. Given the configuration of the site, the AV's sensors are unable to detect the approaching tanker truck. The pedestrian, however, has had time to grasp the situation. He understands that if the AV continues on its way, the consequences will be disastrous not only for him but for everyone concerned. Paralysed by the situation, he is seized with fear, and his face expresses it. But, unlike in the first situation, the object of his fear is that he and many others will suffer bodily harm or lose their lives.

2.

Suppose that the AV is able to recognize the facial expressions of human beings in its environment. Could it distinguish the fear of the pedestrian who, in the first situation, is concerned only with preserving his own physical integrity or life, from the fear of the pedestrian who, in the second situation, is concerned with preserving a large number of lives? The difference between the signals sent by the two pedestrians would be clearer if the pedestrian’s emotion in the tanker truck situation could be translated into the request “Spare us!”, while the pedestrian’s request in the first situation would be “Spare me!” (This would be even more obvious if the requests were respectively: “Spare us, there are 20 of us at the crossroads!” and “Spare me, even if I’m alone at the crossroads!”) But the second pedestrian’s fearful facial expression cannot convey the content of the proposition that he would communicate through language if he had the opportunity. From a technical point of view, the question turns on the AV’s ability to grasp that the second pedestrian’s fear communicates a signal that the first pedestrian’s fear does not. But since the AV cannot “perceive” the arrival of the tanker truck, it has, one may assume, no “reason” to discern a distinct signal in the second pedestrian’s expression of fear. In addition, the passengers would also express fear. Their emotions, like those of each of the protagonists realising the horror of the situation, would seem somehow normal, even reasonable. In his book on the rationality of emotions, Ronald de Sousa discussed this question:

“We often speak of a particular emotion as ‘reasonable.’ What this means is not obvious. Sometimes it seems to mean nothing more than ‘I might feel this way too under similar circumstances.’ At other times it reflects broader but equally inarticulate conventional standards: ‘It’s normal to feel this way.’ Sometimes it is equivalent to ‘appropriate.’” (3)

It emerges from his discussion that, if reasonableness is taken to be equivalent to normality (“It is normal to feel that way”), the AV algorithm could “consider” that it is normal for each of the protagonists in an accident situation to feel fear. As a result, there is no reason why the fear of one should appear more morally relevant to the AV than the fear of another. Therefore, the AV should not seek to give special meaning to the pedestrian’s emotion in the tanker truck situation. Faced with the tragic choice before it, the AV should choose, from a utilitarian perspective, to sacrifice the pedestrian in both situations.
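The utilitarian reasoning above can be made concrete with a deliberately crude sketch. It assumes that the only thing counted is the number of people harmed, and it uses the figures given in Section 1 (one pedestrian, two passengers, about 20 people affected in the tanker truck situation). The names `Option` and `utilitarian_choice` are hypothetical, introduced only for illustration; nothing here describes how a real AV algorithm works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    people_harmed: int  # assumption: crude utilitarian cost, a simple head count


def utilitarian_choice(options):
    """Return the option that harms the fewest people."""
    return min(options, key=lambda option: option.people_harmed)


# What the AV "perceives" is identical in both situations, because its
# sensors cannot detect the tanker truck: one pedestrian versus two passengers.
perceived = [Option("continue", 1), Option("swerve", 2)]

# What is actually at stake in the second situation, which only the
# pedestrian has grasped (about 20 people, per Section 1).
actual_second = [Option("continue", 20), Option("swerve", 2)]

print(utilitarian_choice(perceived).name)      # continue
print(utilitarian_choice(actual_second).name)  # swerve
```

On these assumptions, the same utilitarian rule yields opposite decisions depending on whether the information carried by the second pedestrian's fear is available to the AV, which is exactly the gap the thought experiment is meant to expose.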

3.

What would a sentimentalist ethics say about this deadlock? According to Michael Slote, such an ethics “is the view that moral distinctions and motivations derive from emotion or sentiment rather than (practical) reason” (4). For the sake of efficiency, we propose four statements consistent with a sentimentalist ethics, three of which are related to virtue ethics – a normative moral theory whose theses do not perfectly overlap with those of sentimentalist ethics, but some versions of which are described (by Slote) as “virtue-ethical sentimentalism.” We mention the autonomous vehicle in these statements as if it had moral agency:

(i) Emotions communicate information and they “infuse” themselves out of sympathy. (5)

(ii) The AV should demonstrate a “reliable sensitivity” to the normative requirements imposed by a given situation. (6)

(iii) The AV should “have the right emotions, on the right occasions, to the right extent, towards the right people or objects.” (7)

(iv) The AV should behave as a virtuous person would.

Much space would be required to discuss the content and relevance of these statements. In particular, statement (iv) would require an artificial intelligence able to recognise the moral goods involved in accident situations (absence of harm, self-preservation, respect for others) and to exercise the virtues that make it possible to realise these goods. As for statement (iii), it can only be meaningful if it is interpreted (again) in a metaphorical sense, since the autonomous car can only mimic the emotions appropriate to the situation. However, if the AV is replaced by a real flesh-and-blood person, statements (i) to (iv), especially (ii) and (iii), seem to correspond to legitimate expectations of human beings faced with moral choices.

4.

Hence the following question: can we agree to delegate full decision-making power to algorithms (those of future fully autonomous vehicles) that would not take into account the emotions experienced by human beings – i.e., to use the moral dilemma described above, that would not have a “reliable sensitivity” to make distinctions between emotional signals? Shouldn’t these algorithms be required to behave as moral agents with (metaphorically) virtuous character traits and the ability to experience, perceive and interpret emotions? If emotions are morally significant, then the moral algorithms of future fully autonomous cars should take them into account. Even fear – an apparently instinctive, non-rational emotion that we share with other animals and the function of which is to signal a danger and invite us to flee it – has a rational component in the sense that it allows us to judge well (8). In any case, this is what the thought experiment described in Section 1 sought to illustrate. Perhaps it will, in Ruwen Ogien’s words, create moral perplexity.

Alain Anquetil

(1) See my article of 23 November 2018.

(2) R. Ogien, L’influence de l’odeur des croissants chauds sur la bonté humaine et autres questions de philosophie morale expérimentale, Paris, Editions Grasset & Fasquelle, 2011. Tr. M. Thom, Human kindness and the smell of warm croissants: an introduction to ethics, New York, Columbia University Press, 2015.

(3) R. de Sousa, The rationality of emotion, The Massachusetts Institute of Technology, 1987.

(4) M. Slote, “Virtue ethics and moral sentimentalism,” in S. van Hooft (ed.), The handbook of virtue ethics, Acumen Publishing Limited, 2014.

(5) Expression inspired by David Hume (“When any affection is infused by sympathy…”), Treatise of Human Nature, Book II – Of The Passions, L. A. Selby-Bigge (1896 ed.), 1739.

(6) Expression used by John McDowell in his article “Virtue and reason,” The Monist, 62(3), 1979, pp. 331-350. He states that “a kind person has a reliable sensitivity to a certain sort of requirement that situations impose on behaviour.”

(7) R. Hursthouse, On virtue ethics, Oxford University Press, 1999.

(8) See Hursthouse, op. cit.